
The rapid advancement of generative AI has unlocked extraordinary potential in science, research, automation, and productivity. Yet alongside this progress comes a serious concern: what happens when highly capable models can be misused for harmful purposes?

Recently, Anthropic expressed concerns that advanced versions of its flagship model, Claude, could, if not properly safeguarded, lower barriers to dangerous knowledge. The issue is not intent; it is capability. As AI becomes more powerful, so does the responsibility to constrain it.

This article explores the real pain point facing AI developers today, balancing capability with safety, and presents structured solutions to mitigate misuse without stalling innovation.

The Core Pain Point: Capability Outpacing Control

Modern AI systems can summarize research papers, generate code, simulate scientific reasoning, and answer complex technical questions. While these abilities accelerate legitimate research, they also raise a difficult question:

Can advanced reasoning models unintentionally assist harmful actors?

The concern does not mean AI is inherently dangerous. Instead, it highlights a structural issue:

| Risk Dimension | Traditional AI | Advanced AI Models |
|---|---|---|
| Knowledge Depth | Surface-level | Expert-level reasoning |
| Context Awareness | Limited | Multi-step inference |
| Instruction Following | Basic | Complex task execution |
| Abuse Potential | Low | Elevated without safeguards |

As reasoning depth increases, so does the need for layered protection systems.

Why This Concern Matters Now

AI capability is no longer theoretical. Enterprises are integrating advanced models into:

- Pharmaceutical research

- Industrial chemistry simulations

- Cloud-based automation tools

- Open developer platforms

The challenge is that the same reasoning engine capable of helping design new medicines could, if insufficiently constrained, assist in harmful theoretical analysis.

The solution is not to limit progress but to architect AI safety as infrastructure, not an afterthought.

The AI Safety Architecture Framework

Modern AI safety is built on layered defense. Think of it as a multi-stage control system:

User Prompt

↓

Input Risk Detection

↓

Model Reasoning Guardrails

↓

Output Filtering & Review

↓

Human Oversight (if required)

Each layer is meant to reduce the probability of misuse while preserving legitimate functionality; no single layer has to catch everything.
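To make the layered flow concrete, here is a minimal Python sketch of the four stages as one request pipeline. It is illustrative only: the term lists `BLOCKED_TERMS` and `REVIEW_TERMS`, the `Decision` type, and the `run_pipeline` function are hypothetical names, substring matching stands in for the trained classifiers a real system would use, and the "model reasoning guardrails" layer is assumed to live inside whatever `model` callable is passed in.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical placeholder term lists. A production system would rely on
# trained classifiers and policy models, not simple substring matching.
BLOCKED_TERMS = {"example-blocked-topic"}
REVIEW_TERMS = {"example-sensitive-topic"}


@dataclass
class Decision:
    allowed: bool = True
    needs_review: bool = False
    reasons: List[str] = field(default_factory=list)


def scan(text: str, stage: str, decision: Decision) -> None:
    """Block or flag text at a given stage ("input" or "output")."""
    lowered = text.lower()
    for term in BLOCKED_TERMS:
        if term in lowered:
            decision.allowed = False
            decision.reasons.append(f"{stage}: blocked ({term})")
    for term in REVIEW_TERMS:
        if term in lowered:
            decision.needs_review = True
            decision.reasons.append(f"{stage}: flagged for review ({term})")


def run_pipeline(prompt: str, model: Callable[[str], str]) -> Tuple[str, Decision]:
    decision = Decision()

    # Layer 1: input risk detection, before the model sees the prompt.
    scan(prompt, "input", decision)
    if not decision.allowed:
        return "Request declined.", decision

    # Layer 2: model reasoning guardrails are assumed to be inside `model`
    # (e.g. provider-side safety training); here it is just a callable.
    output = model(prompt)

    # Layer 3: output filtering on the generated text.
    scan(output, "output", decision)
    if not decision.allowed:
        return "Response withheld.", decision

    # Layer 4: human oversight, only when an earlier layer flagged the exchange.
    if decision.needs_review:
        return "Held for human review.", decision
    return output, decision
```

A benign request passes straight through (`run_pipeline("Summarize this paper", my_model)` returns the model's text), while a flagged output is held rather than returned. The design choice worth noting is that the cheap checks run first and the human reviewer is reached only for the small fraction of traffic an automated layer has flagged.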

Comparison: Open Access vs Guarded AI Systems

| Model Type | Characteristics | Risk Exposure | Control Mechanisms |
|---|---|---|---|
| Open-weight models | Freely downloadable | High | Community moderation |
| API-based controlled models | Hosted access only | Medium | Rate limiting, monitoring |
| Enterprise-governed AI | Custom deployments | Low | Access controls, auditing, logging |

Anthropic’s development process emphasizes internal alignment testing and risk evaluation before deployment, reflecting a governance-first mindset.
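Rate limiting, listed as a control mechanism for API-based models in the comparison table, is one of the simplest controls to picture in code. The sketch below assumes a classic token-bucket policy applied per API key; `TokenBucket`, `RateLimiter`, and the `capacity` and `rate` parameters are hypothetical names chosen for illustration, not any provider's actual API.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class RateLimiter:
    """Keep one bucket per API key (a hypothetical per-key policy)."""

    def __init__(self, capacity: float, rate: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.buckets: Dict[str, TokenBucket] = {}

    def allow(self, api_key: str) -> bool:
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, self.clock)
            self.buckets[api_key] = bucket
        return bucket.allow()
```

The injectable `clock` makes the limiter testable without sleeping. A token bucket tolerates short bursts from legitimate users while capping sustained high-volume probing, which is why it pairs naturally with the monitoring listed in the same table row.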

Real-World Case Studies: Lessons from Adjacent Industries

To understand the stakes, we can examine historical parallels:

1️⃣ Biotechnology Regulation

When CRISPR gene-editing tools became accessible, global scientific bodies implemented strict ethical guidelines. The technology remained available—but governed.

2️⃣ Cloud Security Evolution

Early cloud adoption saw widespread misconfigurations. Today, DevSecOps, zero-trust models, and continuous monitoring are standard practice.

3️⃣ Nuclear Research Controls

Advanced scientific knowledge exists—but access to materials, infrastructure, and tools is tightly controlled.

The pattern is clear: powerful tools require governance ecosystems.

Quantifying AI Risk Maturity

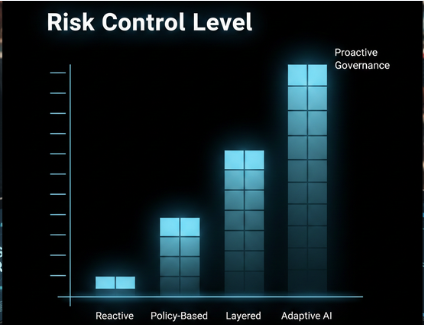

Below is a simplified maturity chart describing how organizations can progress from reactive to proactive AI governance:

Organizations operating at the “Reactive” stage respond after incidents. Those at the “Adaptive AI” stage embed continuous monitoring, simulation testing, and red-team exercises.

Safety Techniques Used by Leading AI Labs

These mechanisms aim to reduce risk without crippling performance.

The Business Implication: Trust as a Competitive Advantage

For enterprises integrating AI, the more pressing issue is often not technical misuse but reputational and regulatory risk.

Organizations deploying advanced AI systems must answer:

- How is misuse detected?

- Are outputs audited?

- Who is accountable?

- What safeguards exist for edge cases?
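Two of these questions, whether outputs are audited and who is accountable, come down to keeping records that cannot be quietly altered. Below is a minimal sketch, assuming a hash-chained, append-only log kept in memory; the `AuditLog` class and its field names are hypothetical, and a real deployment would persist entries to durable, access-controlled storage.

```python
import hashlib
import json
import time
from typing import List, Optional


class AuditLog:
    """Append-only log in which each entry stores the previous entry's hash,
    so editing or deleting an earlier record breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        payload = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, user_id: str, prompt: str, decision: str,
               reviewer: Optional[str] = None) -> dict:
        entry = {
            "ts": time.time(),
            "user_id": user_id,    # who made the request (accountability)
            # Store a digest instead of raw text to limit sensitive data in logs.
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "decision": decision,  # e.g. "allowed", "blocked", "escalated"
            "reviewer": reviewer,  # human who signed off, if any
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; False means some entry was altered or removed."""
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(entry):
                return False
            prev = entry["hash"]
        return True
```

Recording the requesting user and the reviewer on every entry addresses accountability, and `verify()` gives auditors a cheap way to detect after-the-fact edits.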

Regulators worldwide are drafting or enacting AI governance rules; the EU AI Act is one example. Companies that proactively build safety layers are likely to move faster than those forced into compliance later.

Capability vs Control: A Strategic Balance

The debate is not about halting AI advancement. It is about building responsible scalability.

A simplified balance model:

High Capability + Low Control = High Risk

High Capability + High Control = Innovation with Stability

Low Capability + High Control = Safe but Limited

The target zone is the second combination: high capability paired with high control.

The Practical Solution: Governance Blueprint for Organizations

Enterprises adopting advanced AI systems should implement:

- Risk Classification Matrix: Categorize use cases into low, medium, and high sensitivity.

- Tiered Access Controls: Limit advanced reasoning access to authorized users.

- Logging & Traceability: Maintain audit logs of high-complexity queries.

- Continuous Red-Team Testing: Simulate adversarial scenarios regularly.

- Human-in-the-Loop Escalation: Flag ambiguous outputs for manual review.

This structured approach ensures innovation without uncontrolled exposure.
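Three of the blueprint items, the risk classification matrix, tiered access controls, and human-in-the-loop escalation, combine naturally into a single routing decision. The sketch below is one way to express that, assuming a three-level sensitivity scale; the use-case names, roles, and the `route_request` function are hypothetical examples, not a prescribed schema.

```python
from enum import IntEnum
from typing import Dict


class Sensitivity(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical risk classification matrix: use case -> sensitivity tier.
USE_CASE_TIERS: Dict[str, Sensitivity] = {
    "document_summary": Sensitivity.LOW,
    "code_generation": Sensitivity.MEDIUM,
    "chemistry_simulation": Sensitivity.HIGH,
}

# Hypothetical tiered access: the highest tier each role may reach.
ROLE_CLEARANCE: Dict[str, Sensitivity] = {
    "guest": Sensitivity.LOW,
    "employee": Sensitivity.MEDIUM,
    "authorized_researcher": Sensitivity.HIGH,
}


def route_request(role: str, use_case: str, model_confident: bool = True) -> str:
    """Return "allow", "deny", or "escalate" for a request."""
    # Unclassified use cases default to the most restrictive tier.
    tier = USE_CASE_TIERS.get(use_case, Sensitivity.HIGH)
    # Unknown roles receive no clearance at all.
    clearance = ROLE_CLEARANCE.get(role)
    if clearance is None or tier > clearance:
        return "deny"
    # Human-in-the-loop: high-sensitivity work and ambiguous outputs go to a reviewer.
    if tier == Sensitivity.HIGH or not model_confident:
        return "escalate"
    return "allow"
```

For example, a guest summarizing a document is allowed, an employee requesting an unlisted use case is denied because it defaults to the high tier, and even an authorized researcher's high-sensitivity request is escalated for review. Failing closed on unknown inputs is the key design choice: new use cases must be classified before they become available.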

Final Perspective

Anthropic’s caution highlights an inflection point in AI evolution. As models grow more capable, governance must scale proportionally. The future will not be defined by which AI is most powerful—but by which AI is most responsibly engineered.

The solution to the fear of misuse is not retreat. It is architecture.

The companies that invest in safety frameworks today will build the most durable AI ecosystems tomorrow.